HN coverage of my sigmoid function notebook, working on a logistic regression implementation

I submitted my notebook about the sigmoid function to Hacker News this morning and was pleasantly surprised to see it picked up and make the front page. The feedback in the comments was mixed, but past some nerd snark there were a few nuggets I could learn from, including this comment about how applying the log odds function to Bayes' theorem can be useful; I will need to dig in a bit to fully grok it. I was also pleased by this comment, which pointed out that the intuitive justification for logistic regression I presented is similar to the one given in the excellent Advanced Data Analysis from an Elementary Point of View (and here is a more direct link to the chapter on logistic regression).

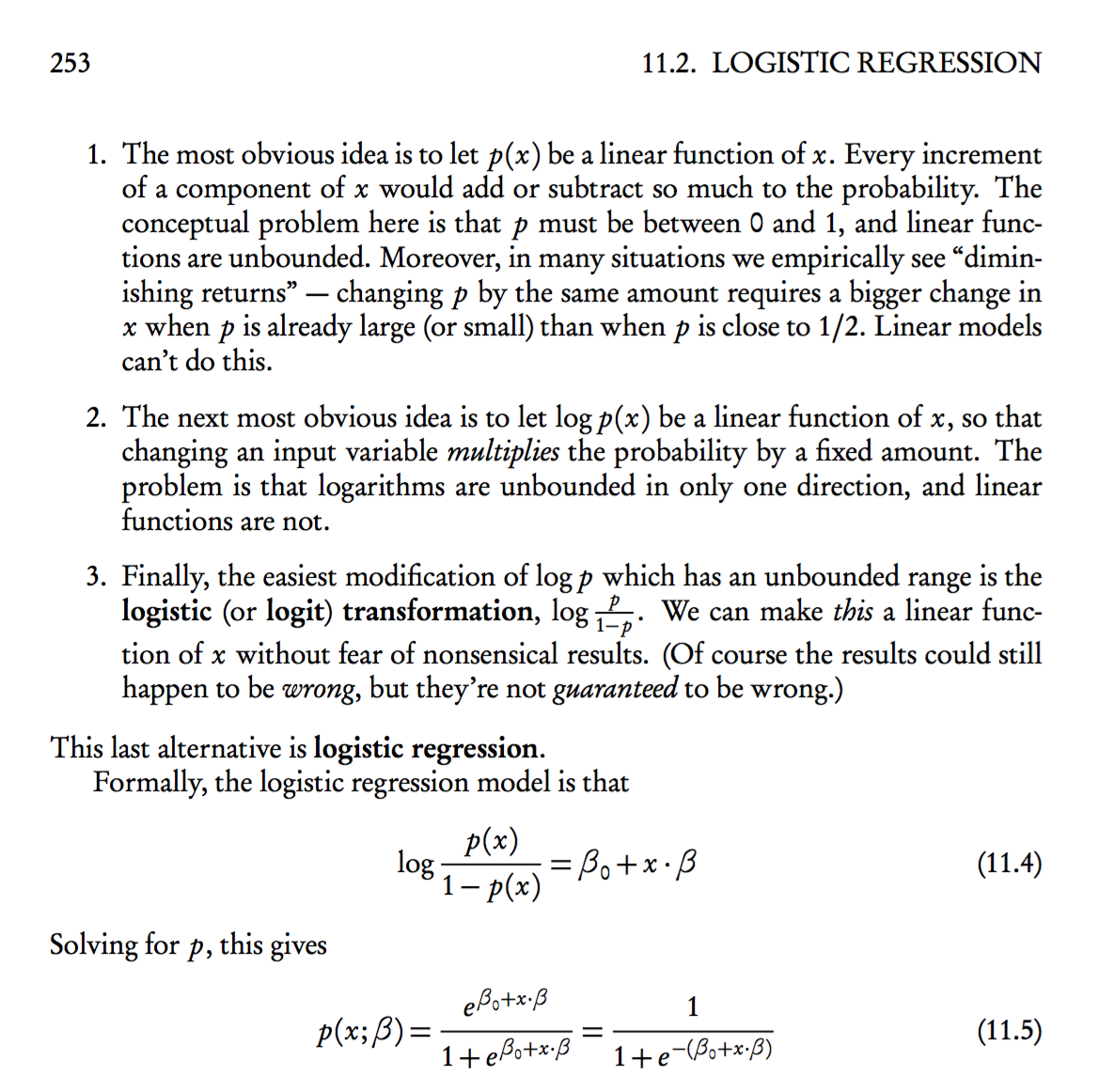
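One standard way to see why the log odds and Bayes' theorem fit together: in odds form, Bayes' theorem says posterior odds = prior odds × likelihood ratio $P(E \mid H) / P(E \mid \neg H)$, so in log-odds space the update is just an addition, and the sigmoid maps the result back to a probability. Here is a minimal sketch in plain Python checking that this agrees with the direct formula; the function names and the example numbers are my own hypothetical choices, not anything from the comment.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps log odds z to a probability in (0, 1).

    Branches on the sign of z so math.exp never sees a large positive argument.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def logit(p: float) -> float:
    """Log odds, the inverse of the sigmoid; defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))

def bayes_update_log_odds(prior: float, lik_h: float, lik_not_h: float) -> float:
    """P(H | E) computed by adding the log likelihood ratio to the prior log odds."""
    posterior_log_odds = logit(prior) + math.log(lik_h / lik_not_h)
    return sigmoid(posterior_log_odds)

def bayes_update_direct(prior: float, lik_h: float, lik_not_h: float) -> float:
    """P(H | E) from the usual form of Bayes' theorem, for comparison."""
    evidence = lik_h * prior + lik_not_h * (1.0 - prior)
    return lik_h * prior / evidence

# Hypothetical example: prior P(H) = 0.01, P(E | H) = 0.9, P(E | not H) = 0.05
print(bayes_update_log_odds(0.01, 0.9, 0.05))  # ≈ 0.1538 (exactly 2/13)
print(bayes_update_direct(0.01, 0.9, 0.05))    # same value
```

The appeal of the log-odds version is that each new, independent piece of evidence just adds its log likelihood ratio, which looks a lot like the weighted sum inside logistic regression.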

On a related note, I am back into the Python ML book and am taking some time to implement logistic regression from scratch. The book presents some theory behind the cost function that is optimized:

> To explain how we can derive the cost function for logistic regression, let's first derive the likelihood L that we want to maximize when we build a logistic regression model, assuming that the individual samples in our dataset are independent of one another.

It then presents a hairy formula without further explanation. Thankfully, the same chapter of the Advanced Data Analysis book mentioned above goes into a bit more detail.
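Writing it out helped me. This is the standard form of the logistic regression likelihood, in my own notation (which may differ slightly from the book's): $n$ independent samples with labels $y^{(i)} \in \{0, 1\}$, net input $z^{(i)} = \mathbf{w}^T \mathbf{x}^{(i)}$, and $\phi$ the sigmoid. Because each label is 0 or 1, each factor reduces to $\phi(z^{(i)})$ when $y^{(i)} = 1$ and to $1 - \phi(z^{(i)})$ when $y^{(i)} = 0$:

$$
L(\mathbf{w}) = \prod_{i=1}^{n} P\big(y^{(i)} \mid \mathbf{x}^{(i)}; \mathbf{w}\big) = \prod_{i=1}^{n} \phi\big(z^{(i)}\big)^{y^{(i)}} \Big(1 - \phi\big(z^{(i)}\big)\Big)^{1 - y^{(i)}}
$$

Taking the log turns the product into a sum, and negating it gives a cost to minimize:

$$
J(\mathbf{w}) = -\log L(\mathbf{w}) = -\sum_{i=1}^{n} \Big[ y^{(i)} \log \phi\big(z^{(i)}\big) + \big(1 - y^{(i)}\big) \log\Big(1 - \phi\big(z^{(i)}\big)\Big) \Big]
$$

Using $\phi'(z) = \phi(z)\big(1 - \phi(z)\big)$, the gradient simplifies to

$$
\frac{\partial J}{\partial w_j} = -\sum_{i=1}^{n} \Big(y^{(i)} - \phi\big(z^{(i)}\big)\Big) x_j^{(i)}
$$

which has the same shape as the Adaline gradient, with $\phi(z^{(i)})$ in place of the linear output $z^{(i)}$.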

The implementation should only require some modest updates to the cost function I used in my Adaline implementation from chapter 2. The author has also provided a bonus notebook on GitHub implementing logistic regression, which I will avoid peeking at until I get mine working.
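Here is a minimal sketch of what I expect those updates to look like, assuming an Adaline-style batch gradient descent class built on NumPy. The class name `LogisticRegressionGD`, its parameters, and the toy data are my own hypothetical choices, not the book's code or the bonus notebook. The linear activation becomes the sigmoid, the sum-of-squared-errors cost becomes the negative log-likelihood, and the weight update keeps the form $\eta \, X^T (y - \phi(z))$, since that is what the gradient of the negative log-likelihood works out to.

```python
import numpy as np

class LogisticRegressionGD:
    """Hypothetical sketch: logistic regression trained with batch gradient descent.

    Structured like an Adaline GD classifier. Labels must be 0 or 1.
    """

    def __init__(self, eta=0.05, n_iter=100, random_state=1):
        self.eta = eta                    # learning rate
        self.n_iter = n_iter              # passes over the training set
        self.random_state = random_state  # seed for weight initialization

    def net_input(self, X):
        # z = w^T x + bias, with the bias stored in w_[0]
        return X @ self.w_[1:] + self.w_[0]

    def activation(self, z):
        # Sigmoid; clipping z keeps np.exp from overflowing on extreme inputs
        return 1.0 / (1.0 + np.exp(-np.clip(z, -250, 250)))

    def fit(self, X, y):
        rgen = np.random.RandomState(self.random_state)
        self.w_ = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
        self.cost_ = []
        for _ in range(self.n_iter):
            output = self.activation(self.net_input(X))
            errors = y - output
            # Same update form as Adaline: w += eta * X^T (y - output)
            self.w_[1:] += self.eta * X.T @ errors
            self.w_[0] += self.eta * errors.sum()
            # Negative log-likelihood of the weights used in this pass
            cost = -y @ np.log(output) - (1 - y) @ np.log(1 - output)
            self.cost_.append(cost)
        return self

    def predict_proba(self, X):
        # Estimated P(y = 1 | x)
        return self.activation(self.net_input(X))

    def predict(self, X):
        # Class label using a 0.5 probability threshold
        return np.where(self.predict_proba(X) >= 0.5, 1, 0)

# Toy usage: a 1-D dataset that is separable at x = 0
X = np.array([[-2.5], [-1.5], [-0.5], [0.5], [1.5], [2.5]])
y = np.array([0, 0, 0, 1, 1, 1])
clf = LogisticRegressionGD(eta=0.05, n_iter=100).fit(X, y)
print(clf.predict(X))
print(clf.cost_[0], clf.cost_[-1])
```

Plotting `cost_` per iteration, as with Adaline, is a quick sanity check that the learning rate is small enough for the cost to keep falling.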