Practice vectorizing numpy operations, and why KNN stinks at image classification

I'm working my way through cs231n, Stanford's class on convolutional neural networks. The first assignment sets the stage by having you implement a k-nearest neighbor classifier by hand.

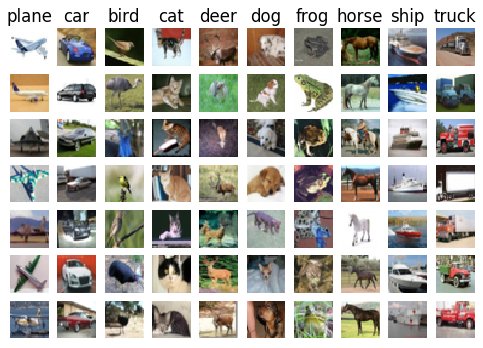

This is a nice warm-up, as it gets you set up with the framework for image classification: train on a set of labeled images, then predict the class of each image in a new test set.
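To make the train-then-predict framing concrete, here is a minimal sketch of a KNN classifier in numpy. It is not the assignment's code: the class name, method names, and the choice of Euclidean distance with a majority vote are my own assumptions for illustration, and labels are assumed to be non-negative integers.

```python
import numpy as np


class KNearestNeighbor:
    """Hypothetical minimal KNN classifier, for illustration only."""

    def train(self, X, y):
        # "Training" for KNN just memorizes the labeled data.
        self.X_train = np.asarray(X, dtype=np.float64)
        self.y_train = np.asarray(y)

    def predict(self, X, k=1):
        X = np.asarray(X, dtype=np.float64)
        # Broadcast (num_test, 1, D) against (1, num_train, D) to get
        # every pairwise difference. Simple, but memory hungry.
        diffs = X[:, np.newaxis, :] - self.X_train[np.newaxis, :, :]
        dists = np.sqrt(np.sum(diffs ** 2, axis=2))
        # Indices of the k closest training points for each test point.
        nearest = np.argsort(dists, axis=1)[:, :k]
        votes = self.y_train[nearest]
        # Majority vote per test point (ties go to the smaller label).
        return np.array([np.bincount(row).argmax() for row in votes])


# Tiny usage example with two well-separated clusters.
clf = KNearestNeighbor()
clf.train([[0, 0], [0, 1], [10, 10], [10, 11]], [0, 0, 1, 1])
predictions = clf.predict([[0, 0.5], [9, 10]], k=3)
```

Note that all the work happens at prediction time, which is part of why this approach gets expensive on real image datasets.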

Reviewing vectorization

The first useful part was simply implementing KNN by hand: first without any help from numpy's vectorization, then vectorized along one dimension, and finally without any loops at all. I spent some time reviewing universal functions and broadcasting, which come in handy when re-implementing the distance matrix calculation.
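The three stages of the distance matrix calculation might look something like the sketch below. These are not my assignment solutions; the function names are made up, and I'm assuming Euclidean (L2) distance with each image flattened into a row. The loop-free version relies on the identity ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b plus broadcasting.

```python
import numpy as np


def dists_two_loops(X_test, X_train):
    # No vectorization across images: one pair at a time.
    dists = np.zeros((X_test.shape[0], X_train.shape[0]))
    for i in range(X_test.shape[0]):
        for j in range(X_train.shape[0]):
            dists[i, j] = np.sqrt(np.sum((X_test[i] - X_train[j]) ** 2))
    return dists


def dists_one_loop(X_test, X_train):
    # Vectorized along the training dimension: X_test[i] is broadcast
    # against every row of X_train.
    dists = np.zeros((X_test.shape[0], X_train.shape[0]))
    for i in range(X_test.shape[0]):
        dists[i] = np.sqrt(np.sum((X_train - X_test[i]) ** 2, axis=1))
    return dists


def dists_no_loops(X_test, X_train):
    # ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, computed for all pairs.
    test_sq = np.sum(X_test ** 2, axis=1)[:, np.newaxis]     # (num_test, 1)
    train_sq = np.sum(X_train ** 2, axis=1)[np.newaxis, :]   # (1, num_train)
    cross = X_test @ X_train.T                               # (num_test, num_train)
    squared = test_sq + train_sq - 2 * cross
    # Clamp tiny negative values caused by floating point round-off.
    return np.sqrt(np.maximum(squared, 0.0))
```

All three should agree up to floating point error, which makes the slow versions handy as a correctness check for the fast one.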

Why KNN stinks at image classification

It's useful to see that a nearest neighbor approach to image classification is not effective, and it makes sense why. A pixel-wise distance comparison between two images is terribly sensitive to any translation of image features. Imagine an image that has a distance of zero to itself, but a large distance to a copy of itself shifted over by a few pixels.
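A quick way to see the shift problem is the toy experiment below. It is only a sketch under my own assumptions: the "image" is random noise rather than a real photo, the shift uses np.roll (which wraps pixels around the edge), and the helper name l2 is made up. Noise is the worst case, since natural images are smoother and a small shift hurts less, but the direction of the effect is the same.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a grayscale image; random noise is an assumption here.
image = rng.random((32, 32))
# The same image shifted 3 pixels to the right (wrapping at the edge).
shifted = np.roll(image, shift=3, axis=1)
# A completely unrelated image of the same size.
unrelated = rng.random((32, 32))


def l2(a, b):
    # Pixel-wise Euclidean distance between two same-sized images.
    return np.sqrt(np.sum((a - b) ** 2))


d_self = l2(image, image)
d_shifted = l2(image, shifted)
d_unrelated = l2(image, unrelated)

print(f"self: {d_self:.2f}  shifted: {d_shifted:.2f}  unrelated: {d_unrelated:.2f}")
```

In this setup the shifted copy ends up about as far from the original as an unrelated image does, even though a person would call the two identical.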

This motivates the need for the translation-invariant nature of features learned by convolutional layers.

I'm keeping my assignments in a private Bitbucket repo out of respect for what might be a desire on Stanford's part to keep the solutions private, though this may turn out to be overly cautious.